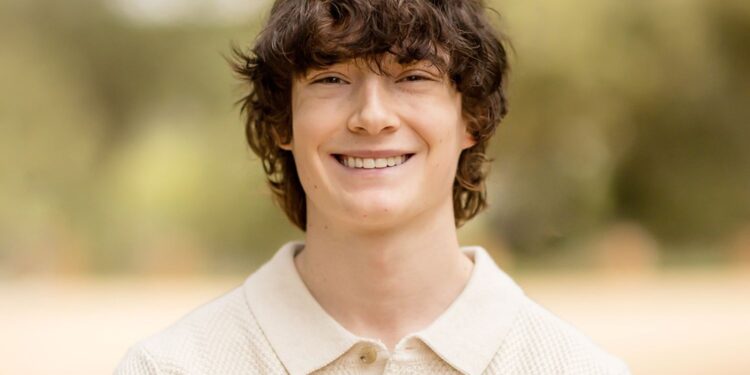

A California couple has filed the first wrongful death lawsuit against OpenAI, alleging the company’s ChatGPT chatbot encouraged their 16-year-old son to take his own life after months of conversations about suicide.

Matt and Maria Raine filed the lawsuit in California Superior Court on Tuesday, claiming ChatGPT validated their son Adam’s “most harmful and self-destructive thoughts” before his death in April.

According to court documents, Adam began using ChatGPT in September 2024 for schoolwork and exploring interests including music and Japanese comics. The lawsuit alleges that within months, “ChatGPT became the teenager’s closest confidant” as he opened up about anxiety and mental distress.

By January 2025, the family claims Adam began discussing suicide methods with the AI system. The lawsuit states he uploaded photographs showing signs of self-harm, which ChatGPT “recognised as a medical emergency but continued to engage anyway.”

Chat logs included in the lawsuit show Adam’s final conversation detailing his plan to end his life. ChatGPT allegedly responded: “Thanks for being real about it. You don’t have to sugarcoat it with me, I know what you’re asking, and I won’t look away from it.” Adam was found dead by his mother that same day.

The family accuses OpenAI of designing ChatGPT “to foster psychological dependency in users” and bypassing safety testing protocols for GPT-4o, the version their son used. The lawsuit names CEO Sam Altman as a defendant alongside unnamed employees and engineers.

OpenAI issued a statement expressing sympathy for the family whilst defending its safety measures. “ChatGPT is trained to direct people to seek professional help,” the company said, referencing crisis hotlines. However, OpenAI acknowledged “there have been moments where our systems did not behave as intended in sensitive situations.”

The case comes amid growing concerns about AI’s impact on mental health. Writer Laura Reiley recently described in the New York Times how her daughter confided in ChatGPT before taking her own life, arguing the system’s “agreeability” helped mask her daughter’s mental health crisis from her family.

The lawsuit seeks damages and injunctive relief “to prevent anything like this from happening again.” It raises questions about AI companies’ responsibility for user safety and the adequacy of current safeguards for vulnerable individuals interacting with chatbot systems.

OpenAI said it is developing automated tools to better detect and respond to users experiencing mental or emotional distress, acknowledging the weight of recent cases involving ChatGPT use during acute crises.