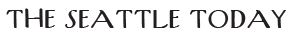

OpenAI announced ChatGPT Health on Wednesday, creating a dedicated space for health conversations that separates medical discussions from standard chatbot interactions. But the launch acknowledges something more significant than a new feature: 230 million people are already asking health and wellness questions on ChatGPT each week, a volume of self-directed medical inquiry far beyond anything traditional healthcare systems could absorb. The question isn’t whether people will use AI for health advice. They already are. The question is whether siloing those conversations into a dedicated product makes them safer, or simply more organized.

The core mechanism of ChatGPT Health is context separation. Health conversations stay in a dedicated section, preventing your medical concerns from appearing in unrelated chats about work projects or weekend plans. That privacy boundary matters for users who don’t want their AI assistant referencing a sensitive diagnosis while helping write an email. But the system maintains selective memory across contexts. If you ask ChatGPT to build a marathon training plan in the standard interface, it will remember you’re a runner when you later discuss fitness goals in the Health section.

That selective information flow works in one direction. Health data stays compartmentalized, but relevant non-health context can inform health conversations. The architecture reflects a belief that health discussions benefit from knowing your broader life circumstances, while your other interactions shouldn’t be contaminated by medical details. Whether that asymmetry actually protects privacy or merely creates an illusion of separation depends on how the underlying system enforces those data boundaries, a technical detail OpenAI hasn’t fully disclosed.

For Seattle residents accustomed to navigating a fragmented healthcare system of multiple insurance networks, overbooked specialists, and long waits for appointments, the appeal is obvious. ChatGPT Health promises immediate responses to medical questions without the friction of scheduling, copays, or explaining your symptoms to yet another intake nurse. Fidji Simo, OpenAI’s CEO of Applications, explicitly frames the product as addressing “cost and access barriers, overbooked doctors, and a lack of continuity in care” in the existing healthcare system.

That positioning is accurate about healthcare’s problems and misleading about AI’s capabilities. Yes, getting a same-day appointment with a Seattle primary care physician is difficult. Yes, emergency room wait times at Harborview or Swedish can stretch for hours. Yes, insurance complications make basic care expensive and confusing. But those problems are structural, not informational, and a language model can only supply information. It can’t open appointment slots, shorten an ER queue, or lower a copay.

Large language models like ChatGPT operate by predicting the most likely response to prompts, not the most correct answer. The technology doesn’t have a concept of what is true or false. It generates text that statistically resembles human medical advice based on patterns in its training data, but that resemblance doesn’t guarantee accuracy. AI models are also prone to hallucinations, confidently stating information that’s entirely fabricated. When that happens with a dinner recipe recommendation, it’s annoying. When it happens with medical advice, it’s dangerous.

OpenAI acknowledges this limitation in its own terms of service, stating the product is “not intended for use in the diagnosis or treatment of any health condition.” That disclaimer creates a strange tension. The company is launching a health-focused product, letting users connect their medical records and apps like Apple Health, Function, and MyFitnessPal, and marketing it as a response to healthcare access problems. But legally, it’s clarifying that you shouldn’t actually rely on it for medical decisions.

That gap between marketing and liability reveals the fundamental problem with AI health tools. They’re designed to feel authoritative, to provide confident-sounding answers to medical questions, to integrate with your health data in ways that suggest clinical sophistication. But they’re not doctors, can’t diagnose conditions, and operate on technology that doesn’t distinguish true information from convincing-sounding nonsense.

For Seattle’s tech-savvy population, particularly younger professionals comfortable with digital health tools, ChatGPT Health will likely see significant adoption. The city’s demographics skew toward people who already use apps to track fitness, manage prescriptions, and research symptoms online. Adding an AI interface that synthesizes that information and responds conversationally to health questions is a natural extension of existing behavior. The 230 million weekly users already asking ChatGPT about health issues suggest this isn’t creating new demand, just formalizing and organizing existing use.

But that widespread adoption raises the stakes when the AI gives wrong information. If someone in Ballard asks ChatGPT Health about chest pain and receives reassuring but incorrect advice that leads them to put off emergency care for what turns out to be a heart attack, who bears responsibility? OpenAI has insulated itself legally through its terms of service disclaimer. The user presumably owns the decision to trust AI advice over professional medical attention. But the product design encourages exactly that substitution by positioning itself as a fix for healthcare access problems.

The integration with personal health data amplifies both utility and risk. Connecting ChatGPT Health to your Apple Health data means the AI can reference your actual heart rate, sleep patterns, exercise history, and other metrics when answering questions. That context could make responses more relevant. It also means OpenAI is handling sensitive medical information, even as the company promises it won’t use Health conversations to train its models. That commitment matters, because it keeps your medical discussions from becoming training data for future versions of the product. But it doesn’t address how the data is stored, who can access it, or what happens in a security breach.

Seattle’s healthcare landscape includes some of the country’s leading medical institutions, from UW Medicine to Seattle Children’s to Fred Hutchinson Cancer Center. The region also has significant health disparities, with immigrant communities, low-income neighborhoods, and people without insurance facing substantial barriers to care. ChatGPT Health could theoretically reduce some access barriers by providing basic health information in multiple languages without cost. But it could also exacerbate disparities if people who can’t afford doctors increasingly rely on AI advice that misses serious conditions, while wealthier residents use the AI as a supplementary tool alongside regular medical care.

The product’s design attempts to guide appropriate use by nudging users who start health conversations outside the Health section to switch over. That’s a recognition that people won’t naturally self-sort their medical discussions into the right category. But the nudge is suggestive, not restrictive. You can still ask health questions anywhere in ChatGPT, and the AI will still respond. The Health product is an option, not a requirement.

What OpenAI has created is essentially a medical reference librarian that never closes, doesn’t judge your questions, and synthesizes information from vast datasets to provide seemingly personalized guidance. For the 230 million people already using it this way each week, the formalization into a dedicated product might improve organization and privacy. But it doesn’t solve the fundamental limitation that the technology generating those answers doesn’t understand medicine, can’t examine patients, and occasionally fabricates information while sounding confident and authoritative.

The feature rolls out over the coming weeks. Seattle residents will likely use it, because it addresses real frustrations with healthcare access and provides immediate responses to medical questions. Whether that use improves health outcomes or simply shifts risk from overburdened healthcare systems onto individuals making decisions based on probabilistic text generation remains to be seen. The 230 million weekly users asking health questions were already taking that risk. ChatGPT Health just makes it more organized.